Le Dernier Rétro

11 May 2020 • 7 min read • Agile, Retrospective

In this post, I share my experience of how we measure the effectiveness of our retrospectives, which we hold every two weeks, and how we improved their outcome in a data-driven way.

Eva Aeppli - Group of 13, Centre Pompidou Málaga © matchilling

Continuous improvement

We should always strive for constant improvement and growth. Regardless of which agile methodology flavour we apply in our projects, retrospectives and reflections are central to the idea of continuous improvement and are therefore fundamental building blocks.

When done well, they are excellent tools that enable us to learn from past experiences, document mistakes, hopefully avoid them in the future, and ultimately increase our potential to grow by establishing a continuous improvement cycle.

According to the 13th Annual State of Agile Report from 2019, 98% of survey respondents state that their organisations follow agile development practices. So chances are that you are already familiar with agile methodologies and that retrospectives are part of your regular work routine.

Making retrospectives part of our routine makes us more efficient: it reduces the need for planning and instils good habits in general. But it also carries the risk of falling into a lethargy that deadens the meeting, because we stop actively examining our environment.

Repeating the same format and always asking the same questions will most likely turn the meeting into a tedious ceremony after a few iterations, leading to lower energy levels and reduced participant engagement. A related issue is when the team revisits the same problems over and over again: it's like Groundhog Day, but without the feel-good ending.

A quick psychological excursion: the phenomenon in which the continuous repetition of a word eventually makes it seem to lose its meaning is called semantic satiation. A great example of how this kind of repetition can be used in art is Steve Reich's pioneering work in experimental music in the 1960s.

This is just for illustration purposes, and I am of course terribly exaggerating when I compare the example above with the occasional monotonous ceremony. Still, I'm pretty sure every one of you remembers those meetings where it was hard to stay attentive and not fall asleep.

So what are effective countermeasures against boring retrospectives?

One of the most effective ways to prevent retrospective boredom is to change the format over time and never to repeat the same exercise twice. Your team could, for example, create a repository of retrospective exercises to collect ideas and reduce the planning overhead for the moderator. Another useful technique is to rotate the facilitator role to keep things moving.
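To make the exercise repository and the rotating facilitator a bit more concrete, here is a minimal Python sketch. The exercise names, the team members and both helper functions are hypothetical, chosen only for illustration: the sketch picks the first exercise the team hasn't run yet and hands the facilitator role around in round-robin order.

```python
# Hypothetical exercise repository; the names are illustrative only.
EXERCISES = ["3Ls", "Start-Stop-Continue", "Sailboat", "Mad-Sad-Glad"]
# Hypothetical team members who take turns facilitating.
TEAM = ["Ana", "Ben", "Chen", "Dara"]


def next_exercise(used: list[str], repository: list[str]) -> str:
    """Return the first exercise from the repository that has not been run yet."""
    for exercise in repository:
        if exercise not in used:
            return exercise
    # Every exercise has been used once: time to collect new ideas.
    raise LookupError("exercise repository exhausted")


def next_facilitator(retro_count: int, team: list[str]) -> str:
    """Round-robin rotation: retro number n is facilitated by team[n % len(team)]."""
    return team[retro_count % len(team)]


used = ["3Ls", "Sailboat"]
print(next_exercise(used, EXERCISES))     # Start-Stop-Continue
print(next_facilitator(len(used), TEAM))  # Chen
```

A shared wiki page or spreadsheet does the same job; the point is that the bookkeeping becomes cheap once the history is written down.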

I won't go into more detail, as there are plenty of online resources that discuss ways of improving retrospectives at great length and can serve as an inspirational starting point. Some links and further resources are listed at the end of this article. One question, though, remains …

How do you know which format works?

First of all, there is no golden rule or guarantee as to which type of retro will work better and eventually improve the overall outcome for your particular team in your specific organisation. Why? Because we're all individuals with different educational and cultural backgrounds who behave and react differently to external stimuli.

While diverse opinions and lively conversations on the subject are absolutely desirable, they also make it difficult to predict which retrospective best fits your particular circumstances.

Still, we can learn from other teams and organisations and try out new things in the spirit of continuous improvement. But first and foremost, my number one recommendation is: do what works for your team!

How do you measure the success of your retrospective?

Every retrospective should be outcome-focused and lead to actionable tasks; avoid the do-nothing retro! By the way, this holds for all types of meetings. So, once the team has agreed to try a new idea, assign the task to a team member who is accountable for executing it, so that you can follow up on its progress later.
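As a rough illustration of the follow-up part, the sketch below assumes a hypothetical `ActionItem` record with an owner and a due date (not a tool we actually use) and lists the open actions whose due date has passed, so they can be raised at the next retro.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ActionItem:
    description: str
    owner: str          # the team member accountable for execution
    due: date           # when we expect the action to be done
    done: bool = False


def overdue(items: list[ActionItem], today: date) -> list[ActionItem]:
    """Open action items whose due date has passed: candidates for follow-up."""
    return [item for item in items if not item.done and item.due < today]


# Hypothetical example actions.
actions = [
    ActionItem("Pair on flaky integration tests", "Ana", date(2020, 5, 12)),
    ActionItem("Draft a definition of done", "Ben", date(2020, 5, 19), done=True),
    ActionItem("Book a room for the demo", "Chen", date(2020, 5, 26)),
]
for item in overdue(actions, today=date(2020, 5, 20)):
    print(f"Follow up with {item.owner}: {item.description}")
# prints: Follow up with Ana: Pair on flaky integration tests
```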

Since the retro is part of the whole agile project lifecycle, obvious candidates for a performance metric are the classic velocity or cycle time. These are excellent tools, and you should use at least one of them to benchmark project progress. However, many parameters can affect these results, and you can't always tell which changes were driven by the retrospective. Therefore, I prefer to focus on a more narrowly scoped metric that lets me compare the outcome of retrospectives over time.

Number of SMART actions

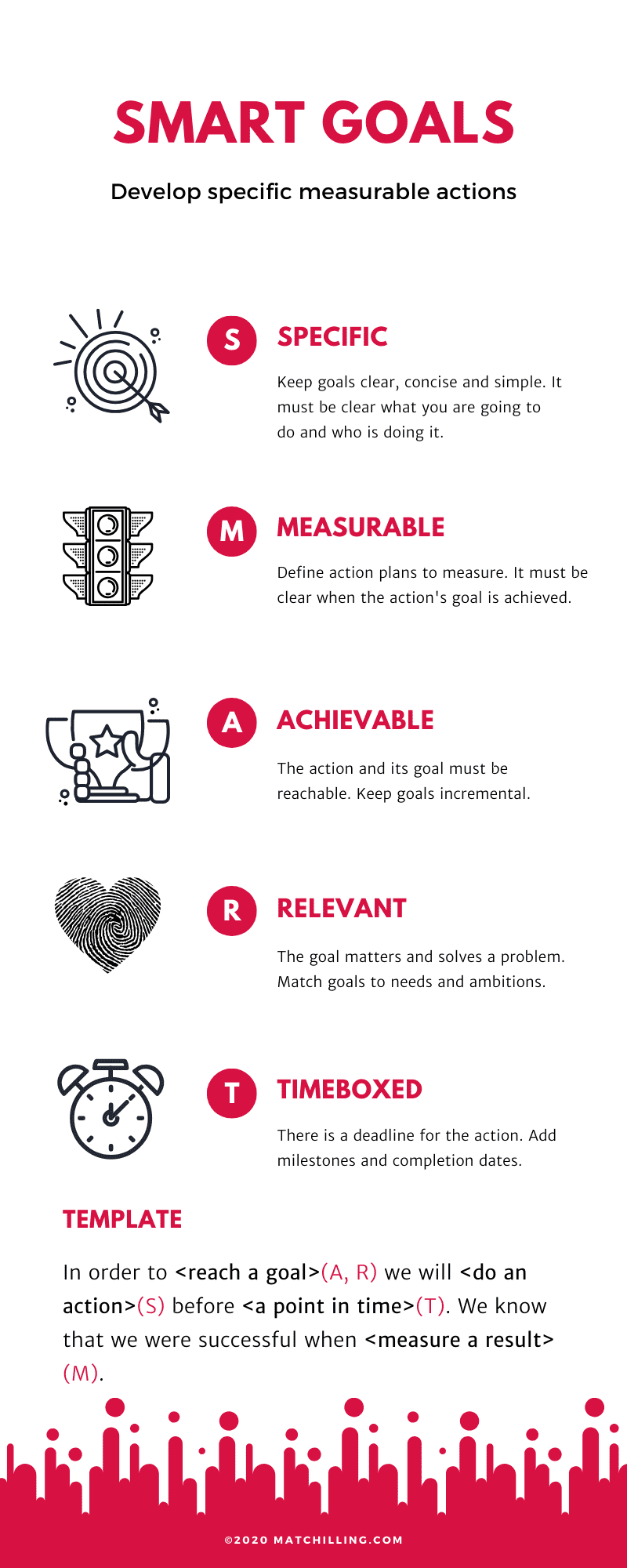

One quantitative metric for determining the effectiveness of a retrospective is simply to count the number of SMART actions it produces: actions that are Specific, Measurable, Achievable, Relevant and Time-boxed.

Rather than going into great detail, I'll let the following infographic explain the five attributes your actions should have:

SMART goals infographic © matchilling - Download PDF version

If you're curious about the SMART goal technique, you can find more details in the literature; Derby and Larsen, for example, have discussed the topic extensively.
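Counting SMART actions can be sketched in a few lines of Python. This is a minimal illustration under one assumption: the team records a yes/no judgement for each of the five attributes, and an action only counts towards the metric when all five are met. The `SmartCheck` class and `count_smart` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class SmartCheck:
    """The team's yes/no judgement on each SMART attribute of one action."""
    specific: bool
    measurable: bool
    achievable: bool
    relevant: bool
    time_boxed: bool

    def is_smart(self) -> bool:
        # An action only counts if it satisfies all five attributes.
        return all((self.specific, self.measurable, self.achievable,
                    self.relevant, self.time_boxed))


def count_smart(checks: list[SmartCheck]) -> int:
    """The metric: number of actions from one retro that pass every check."""
    return sum(check.is_smart() for check in checks)


retro_actions = [
    SmartCheck(True, True, True, True, True),
    SmartCheck(True, False, True, True, True),  # no way to measure success
    SmartCheck(True, True, True, True, True),
]
print(count_smart(retro_actions))  # 2
```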

But let's move on. Now that we know what SMART actions are, we should ask ourselves how we can increase their number as a positive outcome of our retrospectives.

Following the recommendation made earlier, my hypothesis was that higher engagement would lead to more SMART actions.

To prove or disprove my hypothesis, I revisited all our past retrospectives and collected the following data points:

- Date of the retro

- Retro format

- Number of participants

- Number of cards posted, classified by sentiment (positive, neutral, negative)

- Number of actions created
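One way to keep these data points consistent is a small record type per retrospective. The following Python sketch is hypothetical: the field names are my own, and the year 2020 is assumed from the publication date. It derives the total number of cards from the three sentiment buckets so that the two can never disagree.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class RetroRecord:
    """One row of the data set: a single retrospective."""
    held_on: date
    retro_format: str
    participants: int
    positive: int   # cards classified by sentiment
    neutral: int
    negative: int
    actions: int

    @property
    def cards(self) -> int:
        # Total cards is derived from the sentiment buckets, so they always agree.
        return self.positive + self.neutral + self.negative


may_5 = RetroRecord(date(2020, 5, 5), "Experiment", 8, 19, 8, 15, 5)
print(may_5.cards)  # 42
```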

Here is an excerpt from the data set:

| Date | 5th May | 21st Apr | 7th Apr | 24th Mar | 10th Mar |
|---|---|---|---|---|---|
| Number of participants | 8 | 9 | 9 | 9 | 9 |
| Number of cards posted | 42 | 26 | 22 | 23 | 26 |
| Positive cards | 19 | 11 | 12 | 9 | 17 |
| Neutral cards | 8 | 4 | 5 | 7 | 3 |
| Negative cards | 15 | 11 | 5 | 7 | 6 |
| Number of actions created | 5 | 3 | 3 | 3 | 2 |

With these data points at hand, I calculated a few averages and ratios:

| Date | 5th May | 21st Apr | 7th Apr | 24th Mar | 10th Mar |
|---|---|---|---|---|---|
| Avg. cards / participant | 5.25 | 2.89 | 2.44 | 2.56 | 2.89 |
| Avg. actions / participant | 0.63 | 0.33 | 0.33 | 0.33 | 0.22 |
| Cards / actions ratio | 8.40 | 8.67 | 7.33 | 7.67 | 13.00 |
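The derived metrics are simple quotients of the raw values. The following sketch recomputes them from the first table; `derived_metrics` is a hypothetical helper, not part of any library.

```python
# Raw values from the data set excerpt: (date, participants, cards, actions).
RETROS = [
    ("5th May", 8, 42, 5),
    ("21st Apr", 9, 26, 3),
    ("7th Apr", 9, 22, 3),
    ("24th Mar", 9, 23, 3),
    ("10th Mar", 9, 26, 2),
]


def derived_metrics(participants: int, cards: int,
                    actions: int) -> tuple[float, float, float]:
    """Cards per participant, actions per participant and cards per action."""
    return (cards / participants, actions / participants, cards / actions)


for label, participants, cards, actions in RETROS:
    cpp, app, ratio = derived_metrics(participants, cards, actions)
    print(f"{label:>9}: {cpp:.2f} cards/participant, "
          f"{app:.2f} actions/participant, {ratio:.2f} cards/action")
```

One caveat if you reproduce this: Python's `.2f` formatting rounds the exact value 0.625 half to even, so 5th May prints 0.62 actions per participant, whereas the table rounds half up to 0.63.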

An interesting observation in the table above is that, no matter how many cards and actions are created, the ratio between them stays fairly stable over time. Over the whole data set (not only the five retrospectives shown above), the ratio settles at around eight, meaning that roughly one action is created for every eight cards.
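Which summary statistic you pick matters here. The sketch below compares three ways of summarising the cards-per-action ratio for the five retrospectives shown (the full data set is not reproduced in this post). The 10th March value of 13.00 pulls the mean up, while the median and the pooled ratio (total cards divided by total actions) stay closer to eight.

```python
from statistics import mean, median

# Cards and actions per retro from the excerpt (5th May ... 10th Mar).
cards = [42, 26, 22, 23, 26]
actions = [5, 3, 3, 3, 2]

ratios = [c / a for c, a in zip(cards, actions)]
print(f"mean ratio:   {mean(ratios):.2f}")               # 9.01
print(f"median ratio: {median(ratios):.2f}")             # 8.40
print(f"pooled ratio: {sum(cards) / sum(actions):.2f}")  # 8.69
```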

This sounds quite logical: more cards will inevitably lead to more actions. So, given this direct correlation, the question is: how can we get participants to create more cards? And of course, we don't want to create them just for the sake of it; they should be meaningful.

As you might have guessed, the answer lies in the level of engagement and in the earlier advice to change the format of your retrospectives frequently.

| Date | 5th May | 21st Apr | 7th Apr | 24th Mar | 10th Mar |
|---|---|---|---|---|---|
| Format | Experiment | Standard | Standard | Standard | Standard |

As you can see in the table above, we ran an experiment on 5th May that involved just a small change to our standard retrospective format. This had a positive impact: compared with the previous retrospective, it led to an increase of more than 60% in both cards (42 vs 26) and actions (5 vs 3).
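The percentages follow from the first table by comparing 5th May with the previous retrospective on 21st April. The sketch below uses a hypothetical `pct_change` helper; it also shows that normalising by attendance (8 vs 9 participants) makes the jump in cards even larger.

```python
def pct_change(new: float, old: float) -> float:
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100


# 5th May (experiment) compared with the previous retro on 21st Apr.
print(f"cards:   {pct_change(42, 26):+.0f}%")  # +62%
print(f"actions: {pct_change(5, 3):+.0f}%")    # +67%
# Normalising by attendance (8 vs 9 participants) makes the jump larger.
print(f"cards per participant: {pct_change(42 / 8, 26 / 9):+.0f}%")  # +82%
```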

What did we change?

Hint: the clue is in the title of this blog post, which is French for “The Last Retro”. We usually use a standard version of the 3Ls (Liked – Learned – Lacked) for our retros. This time, however, we asked the participants a slightly different question. Rather than narrowing the scope down to the last sprint, we asked them: “Assuming this is the last retrospective of the project, name things that you’ve liked, learned or that you’ve missed.”

It was interesting to see such a tiny change have such a positive impact on the outcome of the meeting. For obvious reasons, you can't ask this question at every retrospective, but that's not the point. The purpose of the experiment was to introduce a small new stimulus that would help the team change the way it thinks about a given problem.

Conclusion

So what's in it for you? As I said before, there is no golden rule, and nobody outside your team can tell you what works best for your circumstances. However, I strongly encourage you to experiment with different formats and to introduce small changes from time to time, to keep engagement high and to establish a healthy environment for constructive discussions.

Further resources

- Agile Retrospectives: Making Good Teams Great by Esther Derby and Diana Larsen.

- SMART Goals: A How to Guide from the University of California

- Retromat: a free resource with thousands of activities for your next retrospective